Cross-industry semantic interoperability, part one

June 29, 2017

This multi-part series addresses the need for a single semantic data model supporting the Internet of Things (IoT) and the digital transformation of buildings, businesses, and consumers. Such a model must be simple and extensible to enable plug-and-play interoperability and universal adoption across industries.

Navigate to other parts of the series here:

- Cross-industry semantic interoperability: Glossary

- Cross-industry semantic interoperability, part two: Application-layer standards and open-source initiatives

- Cross-industry semantic interoperability, part three: The role of a top-level ontology

- Cross-industry semantic interoperability, part four: The intersection of business and device ontologies

- Cross-industry semantic interoperability, part five: Towards a common data format and API

IoT network abstraction layers and degrees of interoperability

Interoperability, or the ability of computer systems or software to exchange or make use of information[1], is a requirement of all devices participating in today’s information economy. Traditionally, interoperability has been defined mostly in the context of network communications. But with millions of devices being connected in industries ranging from smart home and building automation to smart energy and retail to healthcare and transportation, a broader definition is now required that considers the cross-domain impact of interoperability on system-to-system performance.

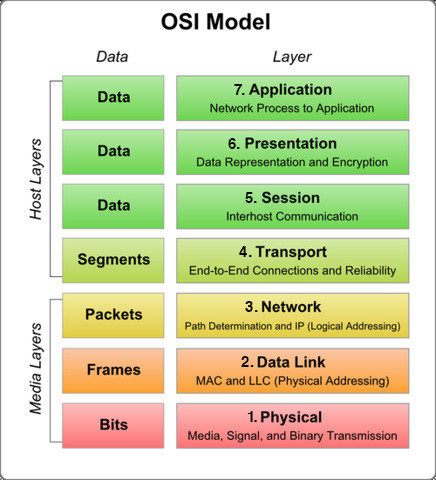

Perhaps the best-known framework for defining network interoperability is the Open Systems Interconnection (OSI) model, which serves as a conceptual reference for telecommunications networks worldwide. The OSI model provides a framework for interoperability through seven distinct abstraction layers that isolate and define various communications functions, from the transmission of bits over physical media (Layer 1) through the sharing of application data in software (Layer 7). A brief description of these layers and their purpose can be found in Figure 1.

[Figure 1 | The OSI model outlines seven abstraction layers that facilitate interoperability in telecommunications and computing networks.]

While each abstraction layer of the OSI model contributes to overall network interoperability, each does so by addressing one of three levels of interoperability described by the Virginia Modeling, Analysis and Simulation Center’s Levels of Conceptual Interoperability Model (LCIM)[2]. These three levels are technical interoperability, syntactic interoperability, and semantic interoperability[3]:

- Technical interoperability is the fundamental ability of a network to exchange raw information of any kind. Technical interoperability is managed by lower levels of the OSI stack (Layers 1 – 4), which define the infrastructure for reliably transmitting and receiving data between points on a network.

- Syntactic interoperability, or the ability to exchange structured data between two or more machines, is typically handled by Layers 5 and 6 of the OSI model. Here, standard data formats such as XML and JSON provide syntax that allows systems to recognize the type of data being transmitted or received.

- Semantic interoperability enables systems to interpret meaning from structured data in a contextual manner (often through the use of metadata), and is implemented in Layer 7 of the OSI stack.

In the OSI framework, the correct implementation of each abstraction layer contributes to an interoperability waterfall, with technical interoperability enabling syntactic interoperability, which in turn enables semantic interoperability. Technical interoperability is currently well understood and standardized across multi-domain communications networks, leaving the syntactic and semantic layers as the key addressable points for truly interoperable machine-to-machine (M2M) data communications.
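The waterfall can be illustrated with a minimal Python sketch. It assumes a JSON message with illustrative field names (`id`, `val`) and a hypothetical shared vocabulary, `SHARED_VOCABULARY`, standing in for an agreed ontology; none of these names come from a standard. Receiving the bytes is the technical step, parsing them is the syntactic step, and only the vocabulary lookup supplies meaning.

```python
import json

# Technical level: raw bytes as they might arrive off the wire (Layers 1-4).
raw_bytes = b'{"id": "zn3-wwfl4", "val": 77.6}'

# Syntactic level: a shared format (JSON) lets the receiver parse the structure.
message = json.loads(raw_bytes.decode("utf-8"))

# Semantic level: a hypothetical shared vocabulary says what the data means.
SHARED_VOCABULARY = {
    "zn3-wwfl4": {"quantity": "air temperature", "unit": "°F"},
}

def interpret(msg: dict) -> str:
    """Attach meaning to an already-parsed message using the shared vocabulary."""
    meta = SHARED_VOCABULARY.get(msg["id"])
    if meta is None:
        # Parsed successfully, but no agreed meaning: syntactic without semantic.
        return f"{msg['id']} = {msg['val']} (meaning unknown)"
    return f"{msg['id']} = {msg['val']} {meta['unit']} ({meta['quantity']})"

print(interpret(message))  # zn3-wwfl4 = 77.6 °F (air temperature)
```

A point missing from the vocabulary still parses without error, which is exactly the gap between syntactic and semantic interoperability.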

The transition from syntactic to semantic interoperability

Whereas Layers 1 through 4 of the OSI model provide a suite of industry-agnostic, Internet Protocol-based (IP-based) network infrastructure technologies, syntactic and semantic interoperability often rely on industry-specific formats and protocols optimized for the type of systems and data at hand. The situation is further complicated by the billions of dollars already invested in existing network infrastructure to support M2M communications in these vertical markets[4].

To facilitate broad syntactic interoperability in these circumstances, the Industrial Internet Consortium (IIC) recently published the “Industrial Internet Connectivity Framework,” or IICF[3]. The IICF redefines the traditional OSI model by combining the Session and Presentation layers (Layers 5 and 6) into a single framework layer that provides all of the mechanisms needed to “facilitate how data is unambiguously structured and parsed by the endpoints” (Figure 2). Cross-industry syntactic interoperability is supported by a set of “core connectivity standards” (currently the Data Distribution Service (DDS), OPC Unified Architecture (OPC UA), oneM2M, and web services) that communicate through a proposed set of standardized gateways.

[Figure 2 | The Industrial Internet Consortium’s Connectivity Framework layer provides the foundation for syntactic interoperability across disparate systems and domains.]

The IICF’s framework layer allows states, events, and streams to be transmitted between applications at varying quality of service (QoS) levels. Such an architecture satisfies the requirements of syntactic interoperability.

Above the IICF’s syntactic interoperability layer lies the Information Layer (the Application Layer in the OSI model), where semantic interoperability has yet to be specified. The distributed data management and interoperability that occur here rely on an ontology shared by two or more machines, which lets them automatically and accurately interpret the meaning (context) of exchanged data and put it to valuable use. As the IICF’s approach to syntactic interoperability suggests, this ontology must account for metadata exchanged between disparate systems and environments. Semantic interoperability represents the highest of the three levels of interoperability between connected systems.

Several industry organizations have attempted to implement semantic data models (information models) that encompass the widest possible spectrum of industries and systems. These consortia include the Object Management Group (OMG), IPSO Alliance, Open Connectivity Foundation (OCF), The Open Group, zigbee, Global Standards 1 (GS1), Schema.org, Project Haystack, and others. However, they have been largely unsuccessful in producing semantic data schemes that apply to broad, cross-industry use cases, because each tends to draw on experience with a narrow set of technologies or industry segments.

The remainder of this series describes how the best attributes of each approach can be leveraged to achieve scalable semantic interoperability across multiple industries and environments.

Describing the meaning of data – Data semantics

Data from sensors to actuators

The Internet of Things (IoT) is changing our world, affecting the way we manage and operate environments such as homes, buildings, stores, hospitals, factories, and cities. Lower-cost sensors, more powerful controllers, the “cloud,” smart equipment, and new software applications are enabling new approaches to managing everything from facilities to supply chains.

Smart devices are creating dramatic increases in the amount and type of data available from our environments, while new software applications are creating new ways to benefit from that data. Together these advances are driving a fundamental shift in how we manage and operate those environments, enabling us to move from traditional control strategies based on simple feedback loops to data-driven methodologies that inform parties about the actual performance of smart devices and systems in real time.

All of these trends can help improve efficiency and reduce overall operational costs when used together effectively. However, it’s one thing to have access to data; it’s another to make it actionable. With more data available than ever before, industries now face the challenge of turning that data into something usable.

Without question, the biggest obstacle facing widespread adoption of IoT and automation systems is interoperability. A McKinsey report estimates that, on average, interoperability between IoT systems is required to capture 40 percent of the total potential value of IoT.

Data from smart devices is stored and communicated in many different formats. It has inconsistent, non-standard naming conventions and provides very limited descriptors to help us understand its meaning. Simply put, the data from smart devices and automation systems lacks information to describe its own meaning. Without meaning, a time-consuming normalization effort is required before that data can be used effectively to generate value. The result is that the data from today’s devices, while technically “available,” is hard to use, which limits parties’ ability to benefit fully from the value it contains[5].

In order to utilize data in value-added applications such as analytics, remote device management, and automation systems, we need to know the meaning of our data. For example, if we obtain data from a sensor point within a building automation system (BAS), it may include a value of 77.6.

[Figure 3 | Example sensor value]

We can’t do any effective analysis or processing until we first understand the data type of the value. Does the value represent temperature, speed, pressure, or some other data type?

[Figure 4 | Example value with data type semantics]

If the value represents a temperature, then we need to know whether it is 77.6 degrees Fahrenheit or degrees Celsius. Unit of measure is another essential descriptor we need in order to understand and use our data.

[Figure 5 | Example value with unit semantics]
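A quick calculation shows why the unit matters. The two functions below use the standard Fahrenheit/Celsius conversion formulas; reading 77.6 as °F gives a mild room temperature, while reading it as °C gives a value far hotter than any occupied space.

```python
def fahrenheit_to_celsius(f: float) -> float:
    """Standard conversion: subtract 32, then scale by 5/9."""
    return (f - 32.0) * 5.0 / 9.0

def celsius_to_fahrenheit(c: float) -> float:
    """Standard conversion: scale by 9/5, then add 32."""
    return c * 9.0 / 5.0 + 32.0

reading = 77.6
print(round(fahrenheit_to_celsius(reading), 2))  # 25.33 (77.6 °F in °C)
print(round(celsius_to_fahrenheit(reading), 2))  # 171.68 (77.6 °C in °F)
```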

Continuing with our example, if all we know is the data type (temperature) and unit of measurement (°F), we still don’t know much about the significance of the value 77.6. Does the temperature value represent air, water, or some other environmental condition?

If it’s an air temperature for a floor within a building, it might be a bit warm for occupants. If it’s a water temperature for a boiler, it may be too cool.

[Figure 6 | Example value with object semantics]

Finally, if the value represents an air temperature for a floor within a building, it might be fine when the building is unoccupied, but not when it’s occupied. So the date and time of the reading are also important.

[Figure 7 | Example value with timestamp]
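Taken together, Figures 3 through 7 describe one reading plus four descriptors. A minimal Python sketch of such a record might look like the following; the class and field names (`SensorReading`, `medium`, and so on) are hypothetical, and the timestamp is illustrative.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class SensorReading:
    """One reading plus the descriptors walked through in Figures 3 to 7."""
    value: float         # Figure 3: the bare value
    quantity: str        # Figure 4: data type, e.g. "temperature"
    unit: str            # Figure 5: unit of measure, e.g. "°F"
    medium: str          # Figure 6: what is measured, e.g. "air" or "water"
    timestamp: datetime  # Figure 7: when the value was observed

    def describe(self) -> str:
        return (f"{self.medium} {self.quantity} of {self.value} {self.unit} "
                f"at {self.timestamp.isoformat(timespec='minutes')}")

reading = SensorReading(77.6, "temperature", "°F", "air", datetime(2017, 6, 26, 9, 0))
print(reading.describe())  # air temperature of 77.6 °F at 2017-06-26T09:00
```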

Let’s say the sensor with the value of 77.6 is identified by the name (i.e., identifier) “zn3-wwfl4”. If I am intimately familiar with the building system and the naming conventions used when it was installed, I may be able to determine that it means Zone 3, West Wing, Floor 4. That would give me a bit of information to work with. If I know the building well, I may also be able to tell that the identifier “zn3-wwfl4” is the air temperature of a floor that is operated on occupancy schedule #1 (7:30 AM – 6:30 PM) and has an occupied cooling setpoint of 74 °F.
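This kind of tribal knowledge can be written down as a parser, but only for one site’s convention. The sketch below is hypothetical: the pattern is inferred from the single identifier “zn3-wwfl4”, and the `ew` (East Wing) code is an assumption added for illustration.

```python
import re

# Hypothetical decoding rules for one site's convention, e.g. "zn3-wwfl4".
# Only "ww" (West Wing) comes from the example; "ew" is an assumed extra code.
WINGS = {"ww": "West Wing", "ew": "East Wing"}
POINT_ID = re.compile(r"^zn(?P<zone>\d+)-(?P<wing>[a-z]{2})fl(?P<floor>\d+)$")

def decode_point_id(point_id: str) -> dict:
    """Recover location details from an identifier that follows the convention."""
    match = POINT_ID.match(point_id)
    if match is None or match["wing"] not in WINGS:
        raise ValueError(f"identifier does not follow the site convention: {point_id!r}")
    return {
        "zone": int(match["zone"]),
        "wing": WINGS[match["wing"]],
        "floor": int(match["floor"]),
    }

print(decode_point_id("zn3-wwfl4"))  # {'zone': 3, 'wing': 'West Wing', 'floor': 4}
```

Notice what the parser cannot recover: that the point is an air temperature, which occupancy schedule it follows, or its setpoint. None of that is encoded in the identifier.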

Armed with this additional information, I could determine that a value of 77.6 is not acceptable at 9:00 AM on a weekday: it’s too hot and will lead to occupant complaints. What enabled me to make that determination, however, was a significant amount of information about the meaning of the specific sensor. I happened to have that information because of my personal knowledge of the building, but it was not recorded in the control system (or any single data store) and is not available in any consistent, machine-readable format.
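Once the schedule and setpoint are written down, the judgment above becomes a few lines of code. This Python sketch hard-codes schedule #1 (7:30 AM to 6:30 PM) and the 74 °F occupied cooling setpoint from the example; the assumption that the schedule applies Monday through Friday only, and the strict greater-than comparison with no tolerance, are additions for illustration.

```python
from datetime import datetime, time

# Metadata that, in the example, existed only in the operator's head.
OCCUPIED_START = time(7, 30)  # occupancy schedule #1 starts at 7:30 AM
OCCUPIED_END = time(18, 30)   # ... and ends at 6:30 PM
COOLING_SETPOINT_F = 74.0     # occupied cooling setpoint, °F

def is_occupied(ts: datetime) -> bool:
    # Assumption: schedule #1 applies Monday (0) through Friday (4) only.
    return ts.weekday() < 5 and OCCUPIED_START <= ts.time() < OCCUPIED_END

def too_hot(value_f: float, ts: datetime) -> bool:
    """True when the space is above the cooling setpoint during occupied hours."""
    return is_occupied(ts) and value_f > COOLING_SETPOINT_F

print(too_hot(77.6, datetime(2017, 6, 26, 9, 0)))  # Monday 9:00 AM -> True
print(too_hot(77.6, datetime(2017, 6, 25, 9, 0)))  # Sunday 9:00 AM -> False
```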

Herein lies the challenge of using the wealth of data produced by today’s systems and devices: the ability to represent, communicate, and interpret the meaning of data. This “data about data” is often referred to as metadata or data semantics.

Having appropriate metadata about the sensor point would enable us to understand the impact of the current value of 77.6 without relying on personal knowledge of the system. As described above, if we knew the occupancy schedule and cooling setpoint for the location where the sensor is installed, we could determine that the space is over temperature during occupied hours and its occupants are most likely uncomfortable.

Without the necessary metadata, however, we can’t determine the impact of the current value or its relationship to proper system operation. To use data effectively, then, we need to combine metadata with the sensor value. When done manually, this process is referred to as mapping or data normalization. This step in the use of time series data has historically been a time-consuming manual process that adds significant cost to the implementation of new software applications such as analytics, remote device management, and automation systems.

Interestingly, despite all of the power they have gained over the last decade and the adoption of standard communication protocols, most automation systems provide little to no ability to capture semantic information about the data they contain. There has been no standardized approach to representing the meaning of the data they generate or contain. The systems provide sensor points with an identifier (zn3-wwfl4), a value (77.6), and a unit (°F), but little other information. As a result, a labor-intensive process is required to “map” the data (data mapping) before any effective use of the sensor data can begin. Clearly, this creates a significant barrier to using the data available from smart devices.

[Figure 8 | Data exchange and normalization]
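The manual mapping step amounts to a join between raw samples and a hand-built metadata table. In this Python sketch, `POINT_METADATA`, its field names, and the second identifier `ahu1-dat` are hypothetical; the point is that any identifier without an entry stays uninterpretable until someone maps it.

```python
# Hypothetical metadata table an integrator would build by hand, point by point.
POINT_METADATA = {
    "zn3-wwfl4": {"medium": "air", "quantity": "temperature",
                  "location": "Zone 3, West Wing, Floor 4", "schedule": 1},
}

def normalize(samples):
    """Join raw (identifier, value, unit) samples with their metadata.

    Samples with no metadata entry are returned separately, because they
    cannot be interpreted until someone maps them.
    """
    mapped, unmapped = [], []
    for point_id, value, unit in samples:
        meta = POINT_METADATA.get(point_id)
        if meta is None:
            unmapped.append(point_id)
        else:
            mapped.append({"id": point_id, "value": value, "unit": unit, **meta})
    return mapped, unmapped

mapped, unmapped = normalize([("zn3-wwfl4", 77.6, "°F"), ("ahu1-dat", 55.2, "°F")])
print(unmapped)  # ['ahu1-dat']
```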

Analysts predict that by 2020 there will be over 25 billion connected devices in the IoT. Together, these devices will generate unprecedented volumes of data that must, in order to create value, be efficiently indexed, shared, stored, queried, and analyzed.

Increasingly, this data is being normalized to time series data (data marked with a timestamp) and transmitted at regular intervals (interval-based) or upon changes of state or value (event-based).

[Figure 9 | Time series and events]
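The difference between interval-based and event-based transmission can be sketched by filtering a regular stream down to its changes. The 15-minute interval, the sample values, and the `deadband` threshold (a common refinement in change-of-value reporting) are all assumptions for illustration, not part of the text.

```python
from datetime import datetime, timedelta

def event_based(samples, deadband=0.0):
    """Keep only samples whose value moved by more than `deadband` since the
    last reported value (change-of-value reporting)."""
    reported = []
    last = None
    for ts, value in samples:
        if last is None or abs(value - last) > deadband:
            reported.append((ts, value))
            last = value
    return reported

# Illustrative interval-based stream: one timestamped sample every 15 minutes.
start = datetime(2017, 6, 26, 8, 0)
values = [74.0, 74.0, 74.1, 75.2, 76.8, 77.6, 77.6]
interval = [(start + timedelta(minutes=15 * i), v) for i, v in enumerate(values)]

events = event_based(interval, deadband=0.5)
print([v for _, v in events])  # [74.0, 75.2, 76.8, 77.6]
```

Both streams are time series; the event-based one simply carries fewer samples when nothing meaningful has changed.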

While analytics applications require only a unidirectional flow of time series data, automation systems require bi-directional data flow: measured data travels from sensors to applications, and command messages travel from applications to actuators.

[Figure 10 | Bi-directional data flow]
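A minimal Python sketch of the two directions: reads flow from a device to an application, while commands flow from an application to an actuator. The `Point` class, the damper identifier, and the 60 % damper command are hypothetical; the 74 °F threshold reuses the occupied cooling setpoint from the earlier example.

```python
from dataclasses import dataclass

@dataclass
class Point:
    """Hypothetical point abstraction: sensors are read, actuators can also be written."""
    point_id: str
    value: float
    writable: bool = False

    def read(self) -> float:
        # Upstream flow: measured data from the device to the application.
        return self.value

    def command(self, new_value: float) -> None:
        # Downstream flow: a command message from the application to an actuator.
        if not self.writable:
            raise PermissionError(f"{self.point_id} is read-only")
        self.value = new_value

sensor = Point("zn3-wwfl4", 77.6)
damper = Point("zn3-wwfl4-damper", 40.0, writable=True)  # hypothetical, % open

# An automation application closes the loop: read upstream, command downstream.
if sensor.read() > 74.0:
    damper.command(60.0)
```

An analytics application would only ever call `read()`; the `command()` path is what makes automation bi-directional.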

The metadata challenge

So how can we capture all of this information, share it, and associate it with the data elements in our automation systems and smart devices? We cannot do it simply by standardizing point identifiers. Even our simple example has more metadata than can be effectively captured in a point identifier. Factor in the numerous other data elements we may want to capture over time, going far beyond our simple example, and it’s obvious we need another approach. An effective solution needs to have the following characteristics:

- It should decouple the point identifier from the representation of the associated metadata. The reality is that we have millions of points in thousands of systems, and their point identifiers cannot be changed. It’s simply not an option, and it isn’t necessary. What is needed is a standardized model to associate metadata with the existing point identifiers.

- It should use a standardized library of metadata identifiers to provide consistent metadata terminology. This will enable software applications to interpret the meaning of data without a manual normalization step. The library needs to be maintained by industry experts as new applications are introduced, so the metadata methodology must be extensible.
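Both characteristics can be sketched in a few lines of Python: metadata lives beside the data, keyed by unchanged point identifiers, and every tag must come from a shared library that can be extended. The approach is in the spirit of the tagging described in [5], but the library contents, the boiler identifier `blr1-hwt`, and the function names are illustrative assumptions.

```python
from typing import Dict, List, Set

# Illustrative standardized tag library; in practice it would be curated by
# industry experts rather than hard-coded.
TAG_LIBRARY: Set[str] = {"point", "sensor", "cmd", "air", "water", "temp", "zone"}

# Metadata keyed by the existing point identifiers, which are never renamed.
point_tags: Dict[str, Set[str]] = {}

def tag_point(point_id: str, tags: Set[str]) -> None:
    """Attach tags to a point, rejecting any term not in the shared library."""
    unknown = tags - TAG_LIBRARY
    if unknown:
        raise ValueError(f"tags not in the library: {sorted(unknown)}")
    point_tags.setdefault(point_id, set()).update(tags)

def extend_library(new_tags: Set[str]) -> None:
    """Extensibility: add new standard terms as new applications appear."""
    TAG_LIBRARY.update(new_tags)

def find(*required: str) -> List[str]:
    """Return the ids of points that carry every required tag."""
    wanted = set(required)
    return sorted(pid for pid, tags in point_tags.items() if wanted <= tags)

tag_point("zn3-wwfl4", {"point", "sensor", "air", "temp", "zone"})
tag_point("blr1-hwt", {"point", "sensor", "water", "temp"})
print(find("air", "temp", "sensor"))  # ['zn3-wwfl4']
```

A query such as `find("air", "temp", "sensor")` works across any system whose points are tagged from the same library, without parsing a single identifier.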

Cross-industry use cases

A key challenge to semantic interoperability is the ability to enable interoperability across different industries, each having its own environments and interoperability use cases. In the ensuing sections of this series, five inter-related industries – Homes & Buildings, Energy, Retail, Healthcare, and Transportation & Logistics – are addressed.

[Figure 11 | Industry use cases benefitting from semantic interoperability]

Part two identifies open-source, community-driven approaches to solving these semantic interoperability challenges.

For term definitions, see the Glossary.

References:

1. “Oxford Dictionaries – Dictionary, Thesaurus, & Grammar.” Oxford Dictionaries | English. Accessed June 20, 2017. https://en.oxforddictionaries.com/.

2. Tolk, A., et al. “Applying the Levels of Conceptual Interoperability Model in Support of Integratability, Interoperability, and Composability for System-of-Systems Engineering.” Virginia Modeling, Analysis and Simulation Center, Old Dominion University. Accessed June 20, 2017. http://www.iiisci.org/journal/cv$/sci/pdfs/p468106.pdf

3. “The Industrial Internet of Things Volume G5: Connectivity …” Accessed June 20, 2017. https://www.iiconsortium.org/pdf/IIC_PUB_G5_V1.0_PB_20170228.pdf

4. ReportsnReports. “M2M, IoT & Wearable Technology Market – 30% CAGR for Installed Base Connections by 2020.” PR Newswire: news distribution, targeting and monitoring. June 21, 2016. Accessed June 20, 2017. http://www.prnewswire.com/news-releases/m2m-iot–wearable-technology-market—30-cagr-for-installed-base-connections-by-2020-583815741.html.

5. Petze, John. “Describing the Meaning of Data—An Introduction to Data Semantics and Tagging”, Project Haystack Connections, Issue 1, Spring 2016.