Dynamic Memory Allocation - Just Say No

May 19, 2020

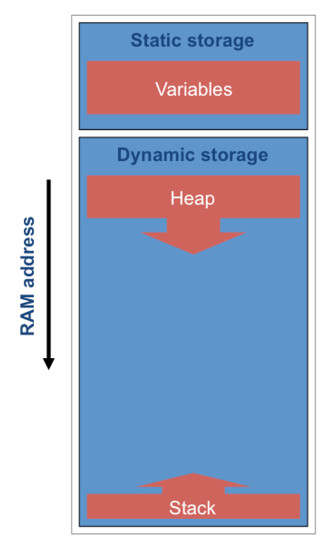

Increasingly, embedded software developers are realizing that dynamic memory allocation – grabbing chunks of memory as and when needed and relinquishing them later – while convenient and flexible, is fraught with problems. The issues are not confined to embedded code; many desktop applications exhibit memory leaks that impact performance and reliability. But here I want to focus on embedded.

There are three key reasons to question the use of the standard malloc() library function:

- Memory allocation can fail. This may be because there is insufficient memory available (in the heap) to fulfill the request. It may also be caused by fragmentation: there is enough free memory in total, but no single contiguous chunk is large enough.

- The function is commonly not reentrant. In a multi-threaded (multi-task) system, any function that may be called by more than one task must be reentrant. This ensures that, if one call is interrupted, another call to the function will not compromise the first.

- It is not deterministic. In a real-time system, predictability (determinism) is critical. The standard malloc() function’s execution time is extremely variable and impossible to predict.

These are all valid points, and there are ways to address them, generally by using the functionality provided by a real-time operating system (RTOS).

However, despite their validity, the problems may not always be as significant as they seem:

- The function returns a NULL pointer if allocation fails. This is easy to check, and the code can then take appropriate action.

- In many applications, all memory allocation and deallocation is performed in a single task. This renders reentrancy unnecessary.

- Not all embedded systems are real time, so determinism may not be required.
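As a minimal sketch of the first point, the check on malloc()'s return value might look like the following. The helper name `sample_buffer_create` and the buffer of 16-bit samples are hypothetical, chosen only for illustration; what "action" to take on failure depends entirely on the application.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: allocate a zeroed buffer of `count` 16-bit samples.
   Returns NULL on failure so that the caller can decide how to recover. */
short *sample_buffer_create(size_t count)
{
    /* Reject empty requests and sizes whose byte count would overflow. */
    if (count == 0 || count > SIZE_MAX / sizeof(short)) {
        return NULL;
    }

    short *buf = malloc(count * sizeof *buf);
    if (buf == NULL) {
        /* Allocation failed: the heap is exhausted or too fragmented. */
        return NULL;
    }

    memset(buf, 0, count * sizeof *buf);
    return buf;
}
```

A caller would test the result before using it, for example `if (buf == NULL) { /* drop the request, log, or enter a safe state */ }`, and call free() on the buffer when it is no longer needed.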

malloc() may present another challenge: it is rather slow. Some systems need speed rather than predictability, so it is worth considering how to provide this function’s capabilities with greater performance.

The primary reason for the function’s lackluster performance is that it provides a lot of functionality. Managing memory chunks of varying size is quite complex. For many applications, this is overkill, as the required memory allocations are all the same size (or a small number of different, known sizes). It would be quite straightforward to write a memory allocator for fixed-size blocks: just an array with usage flags or maybe a linked list. The code would be faster and could even be made deterministic. Allocation failure could still occur but is straightforward to manage. This type of memory allocation is commonly provided by popular RTOS products.
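To make the fixed-size idea concrete, here is a minimal sketch of a block pool built on a linked list of free blocks. All of the names (`pool_init`, `pool_alloc`, `pool_free`) and the block size and count are assumptions for illustration; this is not the API of any particular RTOS. It is also not reentrant as written, so a multi-task system would need to protect the free list, for example with a mutex or by briefly disabling interrupts.

```c
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_SIZE  32u  /* bytes per block (assumed value) */
#define POOL_BLOCK_COUNT 8u   /* number of blocks in the pool (assumed value) */

/* A free block reuses its own storage to hold the link to the next free
   block, so the free list costs no extra memory. Blocks are aligned for
   pointers; stricter alignment needs would require an extra union member. */
typedef union pool_block {
    union pool_block *next;
    uint8_t payload[POOL_BLOCK_SIZE];
} pool_block_t;

static pool_block_t pool_storage[POOL_BLOCK_COUNT];
static pool_block_t *pool_free_list;

/* Thread every block onto the free list. Call once before first use. */
void pool_init(void)
{
    pool_free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; i++) {
        pool_storage[i].next = pool_free_list;
        pool_free_list = &pool_storage[i];
    }
}

/* Pop one block from the free list in constant time.
   Returns NULL when the pool is exhausted. */
void *pool_alloc(void)
{
    pool_block_t *block = pool_free_list;
    if (block != NULL) {
        pool_free_list = block->next;
    }
    return block;
}

/* Push a block back onto the free list in constant time.
   The pointer must have come from pool_alloc(); this sketch does not
   detect double frees or foreign pointers. */
void pool_free(void *ptr)
{
    if (ptr == NULL) {
        return;
    }
    pool_block_t *block = ptr;
    block->next = pool_free_list;
    pool_free_list = block;
}
```

Because allocation and release each touch only the head of the list, both take the same small number of steps regardless of how many blocks are in use, and since every block is the same size the pool cannot fragment.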