Extending networks to SoC IP saves power at the IoT edge

November 25, 2015

SoC architectures have remained largely the same over the last 20 years, with designers adding IP via the main CPU bus to create increasingly complex, integrated chips. But as SoCs become more mainstream and adapt to power, performance, and time-to-market demands of IoT and other devices, new approaches are required that enable designers to iterate targeted solutions more quickly and effectively. In this interview, Drew Wingard, Co-Founder and CTO of IP design house Sonics Inc., describes how on-chip networks and high degrees of automation are helping vendors optimize IoT edge silicon and prepare for a new era of SoC development.

What can you tell us about Sonics and on-chip networking technology?

WINGARD: The key idea for Sonics as an IP company is that we should leverage networking technologies for trying to connect together the various IP blocks that make up an SoC. The general-purpose processor in many of these systems is just the controller and the piece that’s visible to the software developer for adding applications on top. But the reason we build an SoC is usually the other components on the chip that keep the customer from using an off-the-shelf microprocessor as the only processing element in the design. We have taken that system view that there is a reason that people are building an SoC – they’re building an SoC with extra-special hardware because they can do something more efficiently in this optimized hardware instead of just building an array of general-purpose CPUs.

We wanted to use networking technology because it allows us to do a couple of important things. One is that networks are very good at isolating components from each other, or decoupling, and that makes it a lot easier to mix and match: you can take things that weren’t designed to work together and make them work together, because as long as they know how to work with the interface to the network, they’re protected from the rest of the system by the network itself. That’s very different from the approach that’s used in most other situations, where people try to use computer buses. In the early days of SoCs people said, “we should just use this thing that’s coming out of the microprocessor, and we’ll just hook everything up to that.” That leads to the opposite design philosophy from Sonics, in that everything is massively tied to the processor. So if you want to change the frequency of the processor, then everything that talks to it has to run at a different rate. If you want to upgrade your next design from this generation of ARM processor to that generation of ARM processor, and maybe they changed the width of the bus interface to increase the bandwidth from 32- to 64- to 128-bit data, now all of the IP blocks that attach to that interface have to be reworked. As we get to more advanced designs it becomes much, much worse, because as we look at battery-powered things or even things that plug into the wall today, power has become a huge concern, and we have to partition our designs into different pieces so we can slow them down or even shut them off. That implies playing with different clocking rates all over the design; that implies putting in place the appropriate electrical circuits to allow us to shut some parts off and still be safe for the parts that are on. All of those things fall apart whenever everything is tied together and we assume all communication is instantaneous and free.

The other thing we learned very early on is that these chips are very different from each other in many different ways, because the top-level network is essentially the most personalized part of the SoC: you can’t design it until you know what components you’re going to hook up and what they’re trying to do. Sonics attacks that by making our networks incredibly configurable, with lots of features but also the flexibility to leave them out, since these are integrated circuits and nobody wants to pay any extra area for features they’re not using.

The core technology behind Sonics is this ability to try to adapt to the behavior of different IP blocks by using configurability to match the requirements of different systems. So we start with this very configurable hardware IP, which we’ve applied in a number of things we build such as on-chip networks, memory schedulers, and some power management technologies. To set up the configuration and to really explore the solution space of the chip being built, we couple that with a set of methodologies and tools, and that’s where a lot of the automation comes in, which helps designers in terms of preparing for the layout phase, helps them in terms of optimizing their architectures and configuring the interconnect and other shared resources like external memory bandwidth, optimizing performance, and doing the verification at the top level of the design. We wrap those two things together in what we call an Integration Architecture, which is a way of thinking about how to put these things together.

How are you seeing chip design unfold in IoT designs, and what advantages is on-chip networking yielding there?

WINGARD: For a lot of these smaller systems it’s a really fascinating situation where we’ve got devices that have incredibly low total compute power that are going to be gathering and transmitting relatively simple data, but where that data is incredibly valuable in some sense and really needs to be protected. There are all of these really interesting tradeoffs people are making, not at the level of a chip, not at the level of a single device, but rather across a whole interconnected system, from the servers in the cloud, through whatever might be in the fog and the smartphone that might be the local access point, all the way to the edge, about exactly where we’re going to do which parts of the computation. If we do this much here then we can save that amount of communication there and therefore that amount of communication power. It’s a really interesting space.

It turns out that we keep finding new reasons why markets like IoT, while they may not be as complex from a total-number-of-transistors perspective, are harder in other ways. The whole concept of an SoC, where you’re going to put the whole system on a single chip, implies that maybe at the end of the day there’s only one chip. “SoC” has almost become synonymous with the term “application processor,” which is putting the biggest digital part of the system other than the memory into a single chip. In IoT we’re going to get pretty close to getting the whole thing onto a single chip. Maybe we can’t get the sensors onto the same physical die, but they may well be stacked in the same physical package. But certainly we’re going to have to integrate whatever compute technology we need, whatever wireless communication technology we need, the interface to those sensors, whatever we need in terms of persistent memory, whatever we need in terms of dynamic memory. All of those things are going to have to be put onto a single die, and that’s a level of heterogeneous integration that puts stress on systems because it also has to be done at a very low cost.

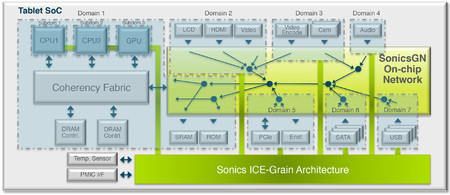

SonicsGN is a product designed around a fundamentally new microarchitecture that allows us to scale up in frequency very high, up to and above 2 GHz, even in 28 nm process technology. It’s designed to scale up and down to very large numbers of blocks. Like everything we do, it is very power efficient in terms of how it uses critical resources like clocks. One side effect of the fact that the on-chip network spans such a large physical distance is that we also tend to have very long clock wires, because the clock tree that feeds our logic has to get out to our logic, and that means it may have to span a long distance. So one of the things that’s important about how we try to minimize our power use is to build logic structures that are able to automatically determine when they are idle so they can reduce that clock distribution power. It turns out that that ends up being one of the biggest terms in the power dissipation of some of the networks that are out there.
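As an illustration of the idle-detection idea described above, here is a minimal Python sketch of a block that stops its own clock after a run of idle cycles. It is a toy behavioral model, not Sonics' implementation: the class name `IdleClockGate`, the `idle_threshold` parameter, and the cycle-by-cycle `tick` interface are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class IdleClockGate:
    """Toy model of logic that gates its own clock after staying idle.

    idle_threshold is a hypothetical parameter: the number of consecutive
    idle cycles to observe before the clock is gated.
    """
    idle_threshold: int = 4
    idle_cycles: int = 0
    clock_enabled: bool = True
    gated_cycles: int = 0  # cycles spent with the clock gated

    def tick(self, has_traffic: bool) -> bool:
        """Advance one cycle; return True if the clock runs this cycle."""
        if has_traffic:
            # Any traffic resets the idle counter and re-enables the clock.
            self.idle_cycles = 0
            self.clock_enabled = True
        else:
            self.idle_cycles += 1
            if self.idle_cycles >= self.idle_threshold:
                self.clock_enabled = False
        if not self.clock_enabled:
            self.gated_cycles += 1
        return self.clock_enabled


def simulate(traffic, idle_threshold=4):
    """Run a traffic pattern (list of bools) through the gate."""
    gate = IdleClockGate(idle_threshold=idle_threshold)
    trace = [gate.tick(t) for t in traffic]
    return trace, gate.gated_cycles
```

In this model the threshold trades wake-up churn against saved clock power: a lower value gates sooner but toggles the clock more often on bursty traffic.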

We want to minimize the amount of this top-level network that ends up being powered all the time. If you look at SoC diagrams you’ll see various boxes that describe a set of domains, and you can imagine that these could be power domains where you can gate power off to different blocks on the chip. With SonicsGN you can not only gate off parts of the block but gate off parts of the network as well, so you can have the smallest rational amount of the network in the part of the chip that gets power all the time when you’re in the deepest sleep mode. To make all that work we have power management interfaces that make it completely safe for an external power manager both to get notifications from us that we’re idle and to request that we power down, and that provide notifications of when it’s a good time to power something up.

The other thing that’s interesting is that the on-chip network is the only piece of hardware that really understands the transactional state of the system. Because we own the fundamental address decoders that determine whether a request coming in from the LCD controller is going to the SRAM and not the DRAM, if the SRAM happens to be in a low-power state we could notify the power manager that it may want to wake the SRAM. It’s like the Wake-on-LAN (WoL) that’s built into the network interface card of your laptop, where we can be watching for things and waking up other parts.
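The wake-on-access behavior described above can be sketched in a few lines of Python. This is a hedged illustration only: the `Target`, `PowerManager`, and `AddressDecoder` classes, the address ranges, and the instant wake-up are hypothetical and do not reflect any actual Sonics interface.

```python
from dataclasses import dataclass, field


@dataclass
class Target:
    """A memory-mapped target such as an SRAM or DRAM (hypothetical)."""
    name: str
    base: int
    size: int
    powered: bool = True

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


@dataclass
class PowerManager:
    """Records wake requests; this toy model wakes the target instantly."""
    wake_requests: list = field(default_factory=list)

    def request_wake(self, target: Target) -> None:
        self.wake_requests.append(target.name)
        target.powered = True


class AddressDecoder:
    """Routes requests by address and flags accesses to sleeping targets."""

    def __init__(self, targets, power_manager):
        self.targets = targets
        self.pm = power_manager

    def route(self, initiator: str, addr: int) -> str:
        for t in self.targets:
            if t.contains(addr):
                if not t.powered:
                    # The decoder knows where the request is headed, so it
                    # can notify the power manager before forwarding it.
                    self.pm.request_wake(t)
                return t.name
        raise ValueError(f"{initiator}: address {addr:#x} maps to no target")
```

A request from an LCD controller to a sleeping SRAM range would produce one wake request, while later accesses to the same range, or accesses to the DRAM range, would produce none.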

In the spring we announced the ICE-Grain power architecture, which is that power manager. Today, most power management is done in software on the host processor, which has some nice characteristics because software is really flexible, but has one really bad characteristic in that the CPU has to be powered up in order to do any power state transitions. For some of these IoT devices, you really don’t want to do that because the CPU usually has the highest power leakage of anything on the chip. The idea with ICE-Grain is to move the detection, control, and notification of power management into hardware that can run autonomously. It still plugs into the operating system, but the operating system tells it relatively simple things like “you’re now in this mode,” and it is up to drivers and the ICE-Grain hardware to determine when to wake things up, when to shut them down, how far to shut them down, and to do that automatically. By doing that automatically we can do something really cool, which is allow people to build chips with many, many, many more individual domains that can be power controlled, whether it be slowing down the clock, stopping the clock, shutting off the voltage, or scaling the dynamic voltage and frequency down. We can get rid of the control processor as the bottleneck that prevents making millions of power state transitions per second.
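To make the division of labor concrete, the following Python sketch models the split described above: software only sets a coarse mode, and a controller decides each domain's state from idle and activity events. It is an assumption-laden toy, not the ICE-Grain design; the `POLICY` table, the domain names, and the `DomainController` class are invented for illustration.

```python
from enum import Enum


class State(Enum):
    ON = "on"
    CLOCK_STOPPED = "clock_stopped"
    POWER_OFF = "power_off"


# Hypothetical policy table: for each OS-selected mode, the deepest state
# each domain is allowed to enter when it goes idle.
POLICY = {
    "active": {"radio": State.CLOCK_STOPPED, "sensor_if": State.CLOCK_STOPPED},
    "sleep": {"radio": State.POWER_OFF, "sensor_if": State.CLOCK_STOPPED},
}


class DomainController:
    """Toy autonomous controller: no CPU involvement per transition."""

    def __init__(self, policy, mode):
        self.policy = policy
        self.mode = mode
        self.state = {domain: State.ON for domain in policy[mode]}
        self.transitions = 0

    def set_mode(self, mode):
        """The only call software makes: 'you are now in this mode'."""
        self.mode = mode

    def on_idle(self, domain):
        """Idle event from hardware: drop to the deepest allowed state."""
        self._move(domain, self.policy[self.mode][domain])

    def on_activity(self, domain):
        """Activity event from hardware: bring the domain back up."""
        self._move(domain, State.ON)

    def _move(self, domain, new_state):
        if self.state[domain] is not new_state:
            self.state[domain] = new_state
            self.transitions += 1
```

The point of the sketch is that `set_mode` is called rarely, while `on_idle` and `on_activity` can fire as often as the hardware events occur without waking a host processor.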

We find that IoT chips actually need some of these capabilities, not because they have a gazillion transistors, but rather because they might need to have a bazillion power domains because the battery is so small that they’ve got to live for months on a tiny little battery. So the part of the chip that you can afford to leave going all the time due to leakage is so small that you have to partition this relatively small chip into still a large number of clock and power domains. It turns out that ends up making a lot of the work that we’ve done for bigger chips even more attractive.
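The battery argument above comes down to simple arithmetic: battery capacity divided by target lifetime gives the average current the whole chip may draw, and always-on leakage eats directly into that budget. The Python sketch below works through it; the function names are hypothetical and any numbers passed in are illustrative, not figures from the interview.

```python
def average_current_budget_ua(capacity_mah: float, lifetime_hours: float) -> float:
    """Average current (in microamps) that drains the battery in the given time."""
    if lifetime_hours <= 0:
        raise ValueError("lifetime_hours must be positive")
    return capacity_mah * 1000.0 / lifetime_hours


def always_on_share(always_on_leakage_ua: float, budget_ua: float) -> float:
    """Fraction of the average current budget consumed by always-on leakage."""
    if budget_ua <= 0:
        raise ValueError("budget_ua must be positive")
    return always_on_leakage_ua / budget_ua
```

For example, under the assumed values of a 40 mAh cell and a 4,000-hour target (roughly five and a half months), the budget is 10 µA on average, so an always-on region leaking 2 µA would already use a fifth of it.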

What can we expect from SoC and IP design houses moving forward?

WINGARD: The other thing we see in IoT is we expect it won’t look like smartphones, where a very small number of designs reach incredibly high volumes. Instead, we expect it’s going to be a lot of designs of more modest volumes that accumulate to a much bigger number. For the silicon companies that, in the era of SoCs, started building huge design teams and trying to churn out one SoC every year, that means their model isn’t going to work. We’re going to have to go back to much smaller design teams that are iterating more quickly and more speculatively because the level of optimization required in IoT applications is so much higher. The baseband processor for a smartphone is designed to cover multiple end appliances. It has to cover multiple regions of the world; it has to cover different ways of attaching things; it has to accommodate, even within a single company like Samsung, a whole bunch of different form factors with different I/O requirements, etc. The level of overdesign associated with that will not be viable, I argue, within the IoT because of the form factor requirements and the small battery requirements. So we’re going to have to be more optimal for the actual device the chip is going into. That makes it exciting. It also means that driving the development cost and design team size down, in order to react to very fickle markets that only support really short design times (where the amount of time between when you know, “oh my gosh! I really need this thing!” and when it had better be out there is months, not years), is going to require a high level of automation in how you build these things, both to keep the design team size down and, maybe more importantly, to keep the design time down. And that’s a place where we’ve invested heavily. Because of the complexity of our solutions we were driven into this land of high automation, but now that we’re being applied in simpler contexts the automation benefit itself becomes a very big deal.

Sonics Inc.