Ensuring trust in IoT with Trusted Platform Modules and Trusted Brokered IO

November 29, 2015

While connecting previously isolated devices on the Internet of Things (IoT) yields countless possibilities, it has also forced industry to reconsider how these seemingly harmless edge systems could be leveraged with malicious intent if not properly secured. In this interview with thought leaders from the Trusted Computing Group (TCG), Steve Hanna of Infineon and Stefan Thom of Microsoft discuss why security must begin at the hardware level, and explain how the TCG’s Trusted Platform Module (TPM) and Trusted Brokered IO specifications are evolving to meet the requirements of the IoT.

Give us some background on the Trusted Computing Group (TCG).

HANNA: The TCG has been around about a dozen years, and it was originally started by a group of IT security companies – Microsoft, IBM, HP, Intel, AMD, etc. – who are our founders and who recognized at the time that something more than just software security is necessary to properly secure information technology. They had the foresight to see at the time that the level of sophistication of attacks is always growing, and we needed to provide the best security possible and do it through a standardized mechanism.

The original focus was on the PC because those were the days of Slammer and all those nasty PC malware and worms. The focus was, “how can we build into our PCs something that is going to be pretty much impervious to these sorts of clever malware attacks?” Yes, maybe you can crack the software and get into the operating system, but no, you’re not going to be able to get into the TPM. Then there is what we can do with the TPM once we have it (things like strong identity and a place to keep your cryptographic keys) and, a really special aspect of trusted computing, something that can provide confidence in the integrity of the software. Something where you can say, “how can we have confidence that this device has not been infected? How can we verify that remotely? And, how can we make sure that the secrets we really care about don’t fall into the hands of malware?” That’s really a special aspect of this Trusted Computing technology – the ability to do those measurements and make sure that a particular device isn’t infected, and if it is, to detect that and be able to recover without substantial harm.

The TCG defined the standards for a variety of different hardware security components, such as the Trusted Platform Module (TPM), self-encrypting drives, trusted network communications, and the like. And, by defining standards for these it enables competition among the vendors that implement those standards, and it enables the vendors who make use of those standards to do so in a vendor-neutral manner (so you can build into Windows, as has been the case for the last several versions, support for the TPM and start building on top of it to create more advanced capabilities like BitLocker). That’s the sort of thing that TCG does – creating open standards for trusted computing, which is just another way to say secure computing, and we’ve adapted over the years as new forms of computing and new applications of computing have come along, such as the Internet of Things (IoT).

How would you define a hardware root of trust, such as a TPM?

HANNA: This is one of those things where people can argue about the nuances of the language, but the gist of it is this: a hardware root of trust is a fundamental capability upon which security has to be built. In order to have confidence in that hardware root of trust, you need to implement it in a way that’s really trustworthy. That’s why the TCG has defined certain standard roots of trust (a root of trust for measurement, a root of trust for storage, and so on and so forth), defined how they work, what the interfaces are, and then created the TPM certification program for a TPM implementing these certain roots of trust and meeting these certain security requirements through what’s called the Common Criteria Security Evaluation, an independent and impartial evaluation of the security of the TPM.

THOM: A hardware root of trust also comes in different flavors. Take the work we’re doing on Windows for phones, where Qualcomm is providing a firmware TPM: that is a software flavor of security, where there is no discrete TPM part that has been inspected. Obviously, when you evaluate the security of a TPM built as a discrete part, you get much stronger guarantees that are enforced in silicon rather than just by some software module that the TPM operates on.

Now, for a regular consumer, while the firmware TPM may be absolutely okay, if you’re running a device in government or a government-regulated enterprise, the requirements might be much stronger in terms of tamper resistance and the possibility of attacking the root of trust. Because at the end of the day we all know that everything can be lifted, you just have to provide the right amount of money to actually get it out. If all you have to protect is your iTunes password, then a software or firmware TPM might be sufficient. If you’re protecting the social security numbers of half the country, then you probably need something more. And the interesting part here is that the TCG is working with so many different manufacturers that we have a lot of different solutions that address the different market segments.

HANNA: One of the nice things about the TPM is that you can buy products at different levels of assurance, different levels of security, that all implement those same APIs, and the same operating system can work across those different products. As you move from a software to a firmware to a hardware TPM, you’re getting an increasing level of assurance, and at the high end would be a hardware-certified TPM. You just decide based on your risk tolerance what level of security is most appropriate.

Sidebar 1 | AMD leverages Cortex-A5, TrustZone for scalable security across embedded portfolio

The Trusted Computing Group maintains a number of liaison relationships with other industry organizations to ensure interoperability, among them the International Organization for Standardization (ISO), the Industrial Internet Consortium (IIC), and GlobalPlatform, the last of which defines the Trusted Execution Environment standard that serves as the basis for ARM’s TrustZone technology. The advance of ARM SoCs in platforms ranging from mobile devices and wearables to embedded systems and server environments has grown the TrustZone ecosystem to one of the tech industry’s largest, as secure TrustZone IP blocks integrate with Cortex-A-class processors and extend throughout systems via the AMBA AXI bus.

After embarking on an “ambidextrous” strategy in 2014 that encompasses both x86- and ARM-based solutions, AMD has been quick to implement TrustZone technology across its portfolio, for example in its 6th generation APUs that offload security functions to dedicated Cortex-A5 cores capable of scaling to meet the size, power, and performance requirements of embedded systems. As Diane Stapley, Director of IHV Alliances at AMD notes, this platform security processor (PSP) implementation of hardware security has become a necessity, particularly as computation evolves to meet the requirements of mobile, cloud, and the IoT.

“A hardware root of trust in a CPU is the way to go,” Stapley says. “Integrating a Cortex-A5 in APUs is a dedicated space for cryptographic acceleration that is fed by firmware and provides access to APIs for the hardware. One of the things that has been done with the core is on-chip dedicated buses and memory space, so when you take I/O off device or through a system there are methods for using hardware-based encryption and checksum operations. This can be used for secure transactions in the IoT space, which implies communications across what would be an open channel.

“When we looked at the A5 it was a tradeoff of space on the die and performance/power, and as you can imagine we wanted a scalable architecture,” she continues. “We can use the core and modules around it for crypto- and firmware-based TPMs that can scale up and down, and it gives us a common base for our hardware root of trust, which is valuable for architects and our partners. For software partners, products based on the same core become ‘write-once, run anywhere’ because the APIs are common, and can be written to almost all other TrustZone products because those APIs are common as well.”

The Trusted Execution Environment leveraged in AMD’s PSP offerings is licensed from Trustonic (www.trustonic.com), an industry partnership that emphasizes mobile security, though over the next year AMD plans to expand this functionality to its client, server, graphics, and embedded product lines under the AMD Platform Security Processor umbrella. For more information visit the AMD website.

What does a TPM architecture look like?

HANNA: Standard interfaces, but as to the internals of how the TPM is implemented, that is up to the vendor to decide. So long as it implements the standard interfaces in the standard ways, passes the appropriate tests (including potentially security certification), then how you implement it is up to you, and that’s where we enable innovation. Some companies might already have some secure processing capability, some chip that they can use for this purpose. Others might be starting from scratch.

THOM: The range of implemented security goes from the Common Criteria-certified devices all the way to the Raspberry Pi 2, and the Raspberry Pi doesn’t provide any security. However, we want to make sure that developers who sit on that platform have the ability to develop code against a TPM, even though it is not secure. So Microsoft is shipping a software TPM that has no security assurances whatsoever, but it provides all the mechanics and the interfaces to actually execute code and commands on the TPM itself. So with the software TPM that you can bring up on this really, really cheap board, you can develop code based on this platform, and then when you productize it you can take the same code and put it on a platform that has a secure version of a TPM, or put a discrete TPM on the Raspberry Pi and work with that. The implementation at the bottom may change, but since the interfaces are the same you don’t have to build a dependency on it into your code or go back to the drawing board with your code development.

HANNA: It’s really important for IoT in particular that we have this standardized interface in a variety of different implementations at different security and cost levels because that reflects the diversity of IoT. IoT is not just the home, it’s also the smart factory, it’s also transportation and self-driving cars and all these different application areas, each one of which has a different level of security that may be needed. Even within the home, the level of security you need in a light bulb versus a front door lock is probably different. But, if you can have the same APIs, the same interfaces, and the same software running on all of those, then you’ve reduced costs and put the security chip where it’s needed for greater security.
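To illustrate the point about writing code once against a common interface, here is a minimal Python sketch. It is not the TCG or Microsoft API: the names `MeasurementDevice`, `SoftwareSimulator`, and `measure_boot_stage` are hypothetical, and the simulator offers no security at all, much like the software TPM described above. The only TPM behavior it borrows is the extend operation, where a register's new value is the hash of its old value concatenated with the incoming digest.

```python
import hashlib
from typing import Protocol


class MeasurementDevice(Protocol):
    """Hypothetical interface that every implementation exposes."""

    def extend(self, index: int, digest: bytes) -> None: ...

    def read(self, index: int) -> bytes: ...


class SoftwareSimulator:
    """Insecure stand-in for development boards without a real TPM."""

    def __init__(self, register_count: int = 24) -> None:
        # Registers start as 32 zero bytes (SHA-256 sized).
        self._registers = [bytes(32) for _ in range(register_count)]

    def extend(self, index: int, digest: bytes) -> None:
        # new value = SHA-256(old value || digest)
        old = self._registers[index]
        self._registers[index] = hashlib.sha256(old + digest).digest()

    def read(self, index: int) -> bytes:
        return self._registers[index]


def measure_boot_stage(device: MeasurementDevice, index: int, image: bytes) -> bytes:
    """Application code: depends only on the interface, not the implementation."""
    device.extend(index, hashlib.sha256(image).digest())
    return device.read(index)


simulator = SoftwareSimulator()
register_value = measure_boot_stage(simulator, 0, b"example bootloader image")
```

Under these assumptions, moving to a product with a discrete TPM would mean supplying a different class that satisfies `MeasurementDevice`, while `measure_boot_stage` stays unchanged.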

How does Trusted Brokered IO play into the whole root of trust concept?

THOM: Over the last decade or so, individual companies have been doing a more or less decent job of securing their own IP on a device in terms of making sure you cannot download their software or tamper with it, but this is an individual approach for every manufacturer. The SoC manufacturers give them the means to lock the flash down or turn the debug interface off, but it’s up to the developer to figure all of this out, to figure out how to secure their solution. That means that every device is handling this in a different way, so for an operating system that wants to control all of these smaller IoT devices, it’s impossible to look over a range of 20 devices and understand “what’s your identity, how can I interact with you, what version of software are you running, are you trusted or not” and so on.

So we took a step back and looked at the TPM, and the TPM has the same problem. We’re now below the TCG library specification. When a discrete TPM manufacturer builds a chip, the TPM library to a large degree is just code that runs on that chip, which has its own identity. If the TPM manufacturer makes an update to that code and provides an updated firmware to the TPM, the question is, “do I have to change the identity of this chip or not?” Is it still the same chip? Yes, it is, but it does something else. So the user of the product probably needs to get some notification that says there was a software update done to your device, and the device may be better suited to run in the future because it was a worthy software update, but it also could have been an attack. If somebody managed to flash bad firmware into a TPM that voided all security, then I most certainly want to know about it.

Since TPMs have the same problem and pretty much any single-chip IoT solution has the same issue on a chip level, how do we factor code into this on the lowest level? So we essentially condensed the capability of the TPM down to the absolute bare minimum, and that’s a specification that we’re writing right now called RTM, or roots of trust for measurement, that puts guidelines down for how identity is supposed to be done at a chip level and how immutable boot code is supposed to be done. We are working with software manufacturers and MCU manufacturers today to build prototypes of chips like this. The main drivers behind this undertaking are Microsoft and Google because we both have the same need of being able to interface with those chips and establish identities of those chips and what firmware is running in those chips. STMicro, on the other side, is involved to build the first prototype implementation of this in hardware.

What this builds is a platform foundation where, if you imagine, there is some bootrom code in the MCU and that bootrom code executes every time you turn it on – there is no way to power on around it. What this code does is take an identity from a register and hash the code that is stored in the chip into [the register], so we get an identity that consists of the hardware ID and the software. After this operation is done the hardware ID is made unavailable and then the code jumps to the actual application code that is flashed to the device.
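The boot sequence described above can be sketched in a few lines of Python. This is only a toy model: the choice of SHA-256, the exact way the firmware hash is folded into the hardware ID, and the names `compound_identity` and `BootRom` are all assumptions made for illustration, not details taken from the RTM specification.

```python
import hashlib
from typing import Optional


def compound_identity(hardware_id: bytes, firmware_image: bytes) -> bytes:
    """Fold a measurement of the firmware into the hardware identity."""
    firmware_digest = hashlib.sha256(firmware_image).digest()
    return hashlib.sha256(hardware_id + firmware_digest).digest()


class BootRom:
    """Toy model of immutable boot code that runs on every power-on."""

    def __init__(self, hardware_id: bytes, firmware_image: bytes) -> None:
        self._hardware_id: Optional[bytes] = hardware_id
        self._firmware_image = firmware_image
        self.identity: Optional[bytes] = None

    def power_on(self) -> bytes:
        if self._hardware_id is None:
            raise RuntimeError("hardware ID is already locked until the next reset")
        # Step 1: combine the hardware ID with a hash of the flashed code.
        self.identity = compound_identity(self._hardware_id, self._firmware_image)
        # Step 2: make the raw hardware ID unavailable before the
        # application code runs (modeled here by discarding it).
        self._hardware_id = None
        # Step 3: the real boot code would now jump to the application.
        return self.identity


device = BootRom(b"example-hardware-id", b"application firmware v1")
identity = device.power_on()
```

Because the application only ever sees `identity`, it cannot recompute a matching value for different firmware on the same chip, which is the property the interview describes.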

The application can now use this compound identity with any caller and say, “look, this is my identity.” It can give the hash of the identity of chip and code to the caller, but since it does not have access to the identity anymore, it cannot derive any fake identity or fake a different software on the same hardware. The caller will take the digest of the compound identity and send that to the manufacturer and say, “here is what the chip claims to be. Is this a trusted piece of hardware with a trusted piece of software on it?” That would allow the manufacturer to then say, “Yes, I recognize the software because I know how the measurement is calculated,” and it will give a key back, and the key is a derivative of the compound identity specifically for the caller.

Now, the caller can essentially establish a trusted connection, because the chip would do exactly the same thing; it would be a shared derivation. And now we have a shared secret on both sides that can be used to communicate with that chip. If that chip is replaced or if the firmware is changed, the chip will no longer belong to that key, and therefore if I attack your hardware or attempt to flash something malicious into your chip, the key that I know is good that I use to talk to you no longer works for the other side. I can reestablish the connection, and I have to go through the attestation step again, but at the end of the day I have a secure channel into my piece of hardware. That’s the foundation for all of this.
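The shared derivation can be illustrated with a short Python sketch. The interview does not say which key-derivation function is used, so HMAC-SHA-256 keyed by the compound identity is an assumption here, as are the helper names and sample values. The manufacturer, which knows the hardware ID and the expected firmware, and the chip itself both run the same derivation for a given caller.

```python
import hashlib
import hmac


def compound_identity(hardware_id: bytes, firmware_image: bytes) -> bytes:
    """Hypothetical compound identity: hardware ID combined with a firmware hash."""
    firmware_digest = hashlib.sha256(firmware_image).digest()
    return hashlib.sha256(hardware_id + firmware_digest).digest()


def derive_caller_key(identity: bytes, caller_id: bytes) -> bytes:
    """Derive a key that is specific to one caller (assumed HMAC-SHA-256)."""
    return hmac.new(identity, caller_id, hashlib.sha256).digest()


hardware_id = b"example-hardware-id"
trusted_firmware = b"application firmware v1"
tampered_firmware = b"application firmware v1 plus implant"
caller_id = b"host-os-instance-1"

# Manufacturer side: recognizes the measurement and returns a key to the caller.
manufacturer_key = derive_caller_key(
    compound_identity(hardware_id, trusted_firmware), caller_id
)
# Chip side: performs the same derivation from its own compound identity.
chip_key = derive_caller_key(
    compound_identity(hardware_id, trusted_firmware), caller_id
)
# Same chip after a firmware change: the derivation yields a different key.
tampered_chip_key = derive_caller_key(
    compound_identity(hardware_id, tampered_firmware), caller_id
)

keys_match = hmac.compare_digest(manufacturer_key, chip_key)
tampered_matches = hmac.compare_digest(manufacturer_key, tampered_chip_key)
```

In this sketch `keys_match` is true and `tampered_matches` is false: a firmware change silently invalidates the old key, which forces the caller back through attestation.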

What we have identified, especially in the IoT world, is that if we use a generic operating system like Windows or Linux to build, say, a furnace, we’ll have many GPIO pins. And let’s imagine that there is a fuel control valve and there’s an igniter. If I leave the policy for how these are supposed to interact up to the dynamic operating system, then an attacker of the operating system can monkey in between the fuel control valve or igniter and the operating system and unhinge the policy. And if the policy is, “You shall only ignite if the gas was turned on no more than three seconds ago,” it’s a very important policy that needs to be enforced.

The idea is to take an MCU and code the policy into the MCU and hook it up to the hardware. So we’re binding functions together with policy in a piece of hardware that the higher operating system can interact with but not unhinge the hardware-based policy. Now you can say that when Windows wants to talk to its igniting unit, it either uses the key it established before or it establishes a new key, and it knows that last time we talked to the manufacturer this was a good processor, this is good code enforcing the right policy, so I can go ahead and turn this on. If it can’t talk to the igniter unit anymore, either it was updated with a good update and we have to go back through the attestation process, or it was a bad update and I definitely don’t want to call into it. So we now have another trust boundary between the operating system and several hardware parts on the board.
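As a rough illustration of binding function and policy together, the following Python sketch models the furnace rule from the example. The class and method names are hypothetical, and a real MCU would implement this in firmware against its own timer. The point of injecting `clock` in the constructor is that the time source belongs to the policy unit, not to the calling operating system.

```python
import time
from typing import Callable, Optional


class IgniterPolicy:
    """Toy model of an MCU that owns both the igniter and its safety rule."""

    MAX_GAS_AGE_SECONDS = 3.0

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock  # the MCU's own timer, not supplied per request
        self._gas_opened_at: Optional[float] = None

    def open_gas_valve(self) -> None:
        self._gas_opened_at = self._clock()

    def close_gas_valve(self) -> None:
        self._gas_opened_at = None

    def request_ignite(self) -> bool:
        """Return True only if gas was turned on no more than three seconds ago."""
        if self._gas_opened_at is None:
            return False
        elapsed = self._clock() - self._gas_opened_at
        return elapsed <= self.MAX_GAS_AGE_SECONDS


# Usage with a controllable clock so the behavior is easy to follow.
fake_time = [100.0]
policy = IgniterPolicy(clock=lambda: fake_time[0])

refused_without_gas = policy.request_ignite()   # gas never opened
policy.open_gas_valve()
fake_time[0] = 102.0
allowed_in_window = policy.request_ignite()     # 2 seconds after gas on
fake_time[0] = 104.0
refused_too_late = policy.request_ignite()      # 4 seconds after gas on
```

The operating system can call `request_ignite` as often as it likes, but it has no method that changes the three-second rule, which mirrors the trust boundary described above.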

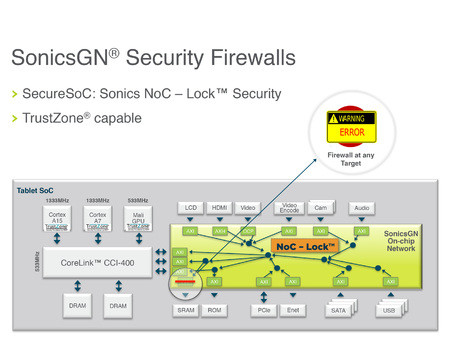

Sidebar 2 | Locking down shared resources in on-chip networks with NoC-Lock

As mentioned in Sidebar 1, ARM TrustZone technology has been widely adopted in the tech industry as a foundation for securing systems based on a Trusted Execution Environment. Essentially, the way TrustZone works is by adding a mode bit to the operating context of the processor that provides information on whether a given instruction is run in secure mode or non-secure mode. The TrustZone instruction set can’t be run by regular programs, and enables every transaction performed by the CPU to be tagged with an identifier that indicates whether or not it is secure. In its most basic form this creates the equivalent of a simple firewall, where, for example, transactions labeled with the TrustZone bit set are able to pass into specified secure areas of the chip, such as the on-chip ROM, while non-TrustZone-authorized transactions have only limited access.

However, in the context of integrated SoCs with shared resources such as memory, this architecture can become quite complex, both in terms of establishing mutual trust from a software perspective and in ensuring that containerized hardware blocks remain mutually secure and protected from other domains. Drew Wingard, CTO of IP design firm Sonics Inc., explains how his company’s NoC-Lock technology augments the security provisions of TrustZone to further isolate SoC blocks in such scenarios, helping minimize the risk of one compromised SoC block bringing down an entire chip.

“We take the bit that ARM defined in TrustZone, but we also allow security controllers that often exist on these chips to provide extra context information that also gets bundled with the transactions coming across the system to say, ‘this hardware block over here is participating in the streaming media container, so when you look at whether it should get access to this part of memory you should consider that carefully,’” Wingard says. “We can essentially provide extra tags, and then the firewall we build called NoC-Lock interrogates the nature of the transaction – is it a read or a write, which hardware block does it come from, which security domain does it believe that it’s part of, and is it secure or not – and then compares that against the firewall’s programming to determine whether or not access should be allowed.

“First of all, from the ground up we’re implementing flexible, parameterizable, configurable containers so that you can have multiple mutually secure domains for minimizing the risk of compromising the entire system,” he continues. “Secondly, we do all of our enforcement at the shared resource, so at the target rather than the initiator side, which has both a simpler software model and actually protects better against attacks. This implementation can also support protected reprogramming as well as exported or dynamically fused settings so that we can perfectly match the customer’s security needs. That has the benefit of allowing them to minimize their risk profile, but it also turns out that sometimes this can simplify what a secure boot process looks like.”
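The kind of check Wingard describes, enforced at the shared resource, can be sketched as follows. This is a generic target-side firewall model in Python, not Sonics’ implementation: the `Transaction` and `RegionRule` types, their field names, and the sample address ranges are all hypothetical. Each rule looks at the same four attributes mentioned in the quote: read or write, originating block, claimed security domain, and the secure bit.

```python
from dataclasses import dataclass
from typing import FrozenSet, Sequence


@dataclass(frozen=True)
class Transaction:
    initiator: str   # which hardware block issued the access
    domain: str      # security domain (container) the access is tagged with
    secure: bool     # TrustZone-style secure bit
    is_write: bool
    address: int


@dataclass(frozen=True)
class RegionRule:
    start: int                 # inclusive
    end: int                   # exclusive
    domain: str
    require_secure: bool
    readers: FrozenSet[str]
    writers: FrozenSet[str]


def access_allowed(txn: Transaction, rules: Sequence[RegionRule]) -> bool:
    """Evaluate the access at the target; anything without a rule is denied."""
    for rule in rules:
        if rule.start <= txn.address < rule.end:
            if txn.domain != rule.domain:
                return False
            if rule.require_secure and not txn.secure:
                return False
            permitted = rule.writers if txn.is_write else rule.readers
            return txn.initiator in permitted
    return False


rules = [
    RegionRule(
        start=0x8000_0000,
        end=0x8010_0000,
        domain="streaming-media",
        require_secure=True,
        readers=frozenset({"video-decoder", "display"}),
        writers=frozenset({"video-decoder"}),
    )
]

decoder_write = Transaction("video-decoder", "streaming-media", True, True, 0x8000_1000)
display_write = Transaction("display", "streaming-media", True, True, 0x8000_1000)
cpu_nonsecure_read = Transaction("cpu", "streaming-media", False, False, 0x8000_1000)

decoder_write_ok = access_allowed(decoder_write, rules)
display_write_ok = access_allowed(display_write, rules)
cpu_read_ok = access_allowed(cpu_nonsecure_read, rules)
```

Here only the decoder's write passes. Checking at the target means a block that misreports nothing but simply lacks a rule is still refused, since the default is to deny.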

For more information on Sonics’ innovations in IP design, security, and on-chip networks, visit www.sonicsinc.com.

What’s on the horizon for TCG and its members?

HANNA: As co-chair of the IoT subgroup, I want to point out that we just released a document called “Guidance for Securing IoT Using TCG Technology.”[1] That is an in-depth technical document that explains how to use TCG technologies for securing IoT, and we have a companion document, the architect’s guide for securing IoT[2], which provides a more high-level overview, a four-pager suitable for briefing management and understanding the issue of how to secure IoT from a high level. And then we’ve got a whole bunch of next-gen efforts. One is the Automotive Thin Profile that’s specifically designed for lightweight automotive electronic control units (ECUs), but there are also other next-gen standards that we’re working on specifically in the area of IoT that address some of the challenges of IoT that have not yet been standardized – for example, software and firmware updates. There are some things that already can be done easily using the TPM to secure that, but there are other things that really need a boost in capability, so we’re working actively on that.

THOM: Microsoft has put a lot of emphasis on the communication protocol AllJoyn, and what we’re looking at now is to use AllJoyn on the chip to take, for example, the attestation data or the identity data and represent that as AllJoyn objects on the chip. Then the device manufacturer can add more capabilities and define them as AllJoyn procedures or signals that are communicated through the encrypted channel that is established with the device key. So we’re coming to something that looks very much like an Arduino platform where you have an operating system and your AllJoyn layer and your RTM and your root of trust on the chip, and all the app developer does is provide the AllJoyn code on top of it. Then we can connect this thing to a Windows computer or Linux computer over any serial link and the operating system can just plug this into the AllJoyn root, and any application in the vicinity or on the local machine can interact with this trusted device. If it understands how RTM works, it can essentially obtain the identity, obtain the measurement, and then go establish the key it can securely work with. So RTM and AllJoyn in that manner provide a wonderful relationship together because they enable each other to bring trust to the device.

Microsoft is really making sure that where our operating system runs we have the necessary hardware to ensure secure solutions, and in return that provides value for the entire industry. There’s open-source development for TPM code that then could also be picked up by alternate operating systems that want to take advantage of a hardware TPM or firmware TPM that may be present on a device. So, all in all, we’re making sure that this wild beach party that is IoT today gets some adult supervision. We’re putting the foundation down to actually build something trustworthy with those devices.

References

[1] Trusted Computing Group. “Guidance for Securing IoT Using TCG Technology, Version 1.0.” 2015 TCG. http://opsy.st/TCGIoTSecurityGuidance.

[2] Trusted Computing Group. “Architect’s Guide: IoT Security.” 2015 TCG. http://opsy.st/TCGIoTArchitectGuide.

Trusted Computing Group

Twitter: @TrustedComputin

LinkedIn: www.linkedin.com/groups/Trusted-Computing-Group-4555624

YouTube: www.youtube.com/user/TCGadmin

Infineon

www.infineon.com/Internet_of_Things

Twitter: @Infineon4Engi

Google+: plus.google.com/+Infineon4engineers

YouTube: www.youtube.com/user/InfineonTechnologies

Microsoft

www.microsoft.com/InternetOfYourThings

Twitter: @MicrosoftIoT

LinkedIn: www.linkedin.com/company/microsoft