Security for industrial control systems protects the national infrastructure

August 04, 2016


From power plants to factories to water supplies, industrial control systems are moving from proprietary and closed networks to become part of the Internet of Things. Secure development processes can reduce software vulnerabilities within these critical infrastructures.

In a scenario that likely astounds most software developers, a computer worm has become a movie star. In the new action thriller Zero Days, the villain blows up chemical plants, shuts down nuclear power cooling systems, wrecks trains, switches off the national power grid, and more. The star of this fast-moving action flick is the notorious Stuxnet worm, which was reportedly developed by US and Israeli intelligence to cripple Iran’s nuclear program. Stuxnet attacked the programmable logic controllers (PLCs) that control centrifuges in uranium enrichment facilities, but the worm and its variants can be tailored to attack systems in a range of manufacturing, transportation, and utility operations.

The movie-going public has been largely unaware of the dangers that lurk within our connected infrastructure – dangers that have haunted engineers and developers. Typically, factory automation systems are run using supervisory control and data acquisition (SCADA) systems for human interface and access. The IEC 61508 standard, along with its derivatives, is implemented to assure the functional safety of the software that runs these systems in a broad range of industries. But these SCADA systems, typically Windows- or Linux-based, are in turn connected to corporate management systems, which need access for inventory control, marketing, accounting, and a host of other purposes. Those corporate systems are, of course, linked to the external Internet and thus offer a perfect avenue for attack from outside. So while attacks on earlier closed systems depended on hapless or malicious individuals to manually install the worm, today’s Internet-connected industrial systems offer a new attack surface. Given this level of connectivity and risk of attack, no system can be considered safe if it is not also secure.

IEC 61508 and its derivatives do not specifically address security. Developers therefore need to address cybersecurity themselves, from the product development stage through post-market management. To comply with the various guidelines and requirements, they must use adequate tools to deal with the high complexity. As these systems increasingly become subject to certification requirements, correctness in coding must be proven and documented along with the required functionality.

The heart of developing secure code is to devise a strategy, which can be based on cybersecurity guidelines such as those published by the National Institute of Standards and Technology (NIST) along with an organization’s own methods, and to build that strategy into the requirements document of the system under development. In addition, the project should adopt a coding standard such as MISRA C to guard against questionable methods and careless mistakes that can jeopardize security without being immediately apparent or even causing functional errors. The next big step, of course, is to make sure that this strategy and coding standard have actually been carried out effectively. Given the size and complexity of today’s software, that verification can no longer be done manually; it requires a comprehensive set of tools that can thoroughly analyze the code both before and after compilation.

Use traceability and analysis to verify security

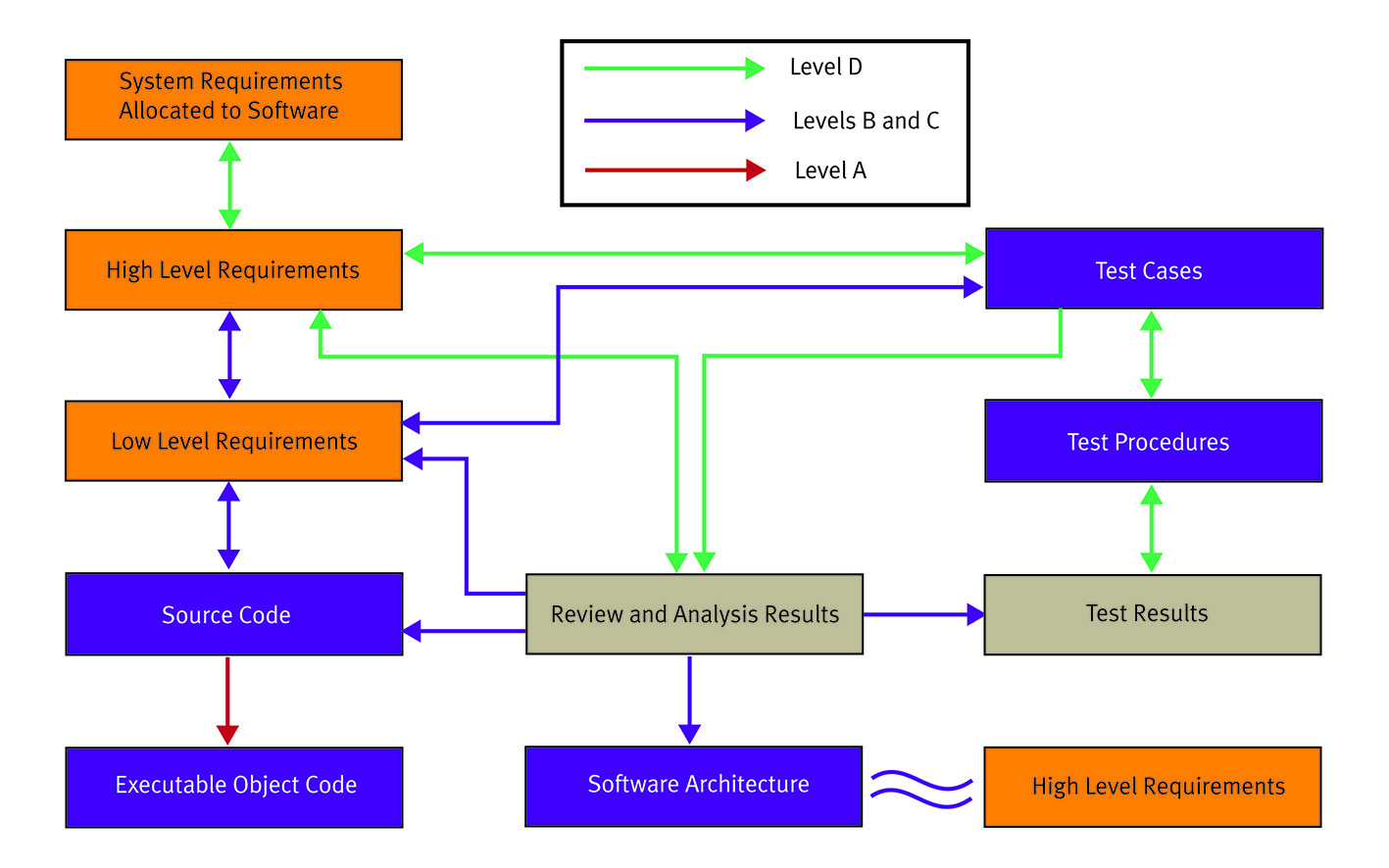

While defining requirements is the essential first step, there must be a well-defined method to trace and verify that the requirements have been met. Requirements traceability and management increase code quality and the overall safety, security, and effectiveness of the application. Bidirectional traceability, based on a requirements document, ensures that every high-level requirement is covered by one or more low-level requirements and that every low-level requirement links down into the code, verification activities, and the artifacts produced during the process. Likewise, those links must trace back upstream from the artifacts and code to the requirements, so that any change at any stage in the process can be easily detected, understood, and managed appropriately (Figure 1).

[Figure 1 | A requirements traceability tool provides process transparency and is essential for determining impact analysis at all stages of the development process.]
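As a rough illustration of what a bidirectional check involves, the sketch below looks for broken links in both directions over a small in-memory traceability matrix. The `TraceMatrix` structure, the requirement IDs, and the file names are all hypothetical; commercial traceability tools keep this data in their own repositories and check far more than this.

```python
from dataclasses import dataclass, field


@dataclass
class TraceMatrix:
    """Hypothetical traceability data, invented for this illustration."""
    # high-level requirement ID -> low-level requirement IDs that refine it
    high_to_low: dict[str, set[str]] = field(default_factory=dict)
    # low-level requirement ID -> code units and tests linked to it
    low_to_artifacts: dict[str, set[str]] = field(default_factory=dict)
    # every code unit or test known to the project
    all_artifacts: set[str] = field(default_factory=set)


def find_trace_gaps(m: TraceMatrix) -> dict[str, list[str]]:
    """Report missing links downstream and upstream."""
    linked_lows = set().union(*m.high_to_low.values())
    linked_artifacts = set().union(*m.low_to_artifacts.values())
    all_lows = linked_lows | set(m.low_to_artifacts)
    return {
        # downstream: requirement with nothing beneath it
        "high_without_low": sorted(
            h for h, lows in m.high_to_low.items() if not lows
        ),
        "low_without_artifact": sorted(
            low for low in all_lows if not m.low_to_artifacts.get(low)
        ),
        # upstream: item that traces back to nothing
        "low_without_parent": sorted(set(m.low_to_artifacts) - linked_lows),
        "artifact_without_requirement": sorted(
            m.all_artifacts - linked_artifacts
        ),
    }


matrix = TraceMatrix(
    high_to_low={"HLR-1": {"LLR-1", "LLR-2"}, "HLR-2": set()},
    low_to_artifacts={
        "LLR-1": {"valve.c", "test_valve"},
        "LLR-2": set(),
        "LLR-9": {"debug.c"},
    },
    all_artifacts={"valve.c", "test_valve", "debug.c", "legacy.c"},
)
gaps = find_trace_gaps(matrix)
for kind, items in gaps.items():
    print(kind, items)
```

In this made-up data set, each of the four categories contains exactly one entry. The upstream gaps (`LLR-9` and `legacy.c`) are the ones most relevant to security, because they represent code or requirements that exist without a documented reason.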


A requirements traceability tool lets teams work on individual activities and link code and verification artifacts back to higher-level objectives. Three major capabilities, operating under two-way requirements traceability, are applied early on and at successive stages of the development process: static analysis, dynamic analysis for functional test and structural coverage analysis, and unit/integration testing. Unit/integration testing applies both static and dynamic analysis early in the development process and is applied to the later-integrated code as well.

Static and dynamic analysis partner for security

In assuring security, the two main concerns are data and control. Questions that must be considered include: Who has access to what data? Who can read from it and who can write to it? What is the flow of data to and from which entities? How does access affect control? Here the “who” can refer to persons like developers and operators – and hackers – and it can also refer to software components either within the application or dwelling somewhere in a networked architecture. To address these issues, static and dynamic analysis must go hand in hand.

On the static analysis side, the tools work with the uncompiled source code to check it for various quality metrics such as complexity, clarity, and maintainability. Static analysis can also be used to check the code against selected coding rules, which can be any combination of the supported coding standards such as MISRA C or CERT C, as well as any custom rules and requirements that the developer or a company may specify. The tools look for software constructs that can compromise security, check memory protection to determine who has access to which memory, and trace pointers that may traverse a memory location. Results should ideally be presented in graphical displays that make it easy to confirm the code is clean, consistent, maintainable, and compliant with the coding standards (Figure 2).

This check can be automated by running the analysis tool, configured with the coding standard definition, against the application’s source code. Existing code will almost certainly need to be modified to conform to the latest security requirements that have been added to MISRA C (Figure 2).

[Figure 2 | Coding standards compliance is displayed inline with file/function name to show which aspects of the system do not comply with the standard. Programming standards call graph shows a high-level, color-coded view of coding standards compliance of the system.]
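To make the idea of rule checking concrete, here is a deliberately simplistic sketch that scans C source text for a few library calls widely regarded as risky. The rule table, the `check_source` function, and the sample C fragment are assumptions made for this illustration. Real MISRA C or CERT C checkers parse the code and analyze data and control flow; they do not match text line by line, and this toy would be fooled by `//` inside a string literal or by block comments.

```python
import re

# Hypothetical rule table for illustration only; not taken from any standard.
BANNED_CALLS = {
    "gets": "unbounded read into a buffer",
    "strcpy": "no destination size check",
    "sprintf": "no destination size check",
}

# \b stops the pattern from matching inside longer names such as fgets.
CALL_PATTERN = re.compile(r"\b(" + "|".join(BANNED_CALLS) + r")\s*\(")


def check_source(source: str) -> list[tuple[int, str, str]]:
    """Return (line number, function name, reason) for each banned call."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        code = line.split("//", 1)[0]  # crude: drop trailing line comments
        for match in CALL_PATTERN.finditer(code):
            name = match.group(1)
            findings.append((line_no, name, BANNED_CALLS[name]))
    return findings


SAMPLE_C = """\
void read_setpoint(char *dst) {
    char buf[16];
    gets(buf);
    strcpy(dst, buf);
    // sprintf(dst, "x"); commented out, so not reported
    snprintf(dst, 16, "%s", buf);
}
"""

for line_no, name, reason in check_source(SAMPLE_C):
    print(f"line {line_no}: {name}() - {reason}")
```

On the sample fragment, the sketch reports the `gets` call on line 3 and the `strcpy` call on line 4, and leaves the bounded `snprintf` call alone.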


Dynamic analysis, on the other hand, tests the compiled code, which is linked back to the source code using the symbolic data generated by the compiler. Dynamic analysis, especially code coverage analysis, can provide tremendous insight into the effectiveness of the testing process. Often, however, developers attempt to manually generate and manage their own test cases. Working from the requirements document is the typical method of generating test cases, and such tests may stimulate and monitor sections of the application with varying degrees of effectiveness. But given the size and complexity of today’s code, that will not be enough to achieve confidence that the code is correct or to obtain any certifications or approvals that may be required.

Automatic test case generation can greatly enhance the testing process by saving time and money. However, effective test case generation depends on quality static analysis of the code. The information provided by static analysis helps the automatic test case generator create the proper stimuli for the software components in the application during dynamic analysis. Functional tests can be created manually to augment the automatically generated test cases, providing better code coverage and a more effective and efficient testing process. Manually created tests are typically derived from the requirements, i.e., requirements-driven testing. These should include any functional security tests, such as simulated attempts to gain control of a device or to feed it incorrect data that would change its mission. Functional testing based on manually created tests should also include robustness testing, such as checking the results of disallowed inputs and anomalous conditions. Furthermore, dynamic analysis provides not only code coverage but also data flow/control analysis, which in turn can be checked for completeness using two-way requirements traceability.
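A robustness test of the kind described above might look like the following sketch, which feeds disallowed inputs to a setpoint validator and records any that slip through. The `accept_setpoint` function, the RPM limits, and the list of bad inputs are hypothetical and chosen only for illustration; a real test set would be derived from the system’s requirements.

```python
import math

# Hypothetical operating limits, for illustration only.
MIN_RPM = 0.0
MAX_RPM = 1200.0


def accept_setpoint(raw: object) -> float:
    """Return a validated speed setpoint or raise ValueError."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(raw, bool) or not isinstance(raw, (int, float)):
        raise ValueError("setpoint must be numeric")
    value = float(raw)
    if math.isnan(value) or math.isinf(value):
        raise ValueError("setpoint must be finite")
    if not MIN_RPM <= value <= MAX_RPM:
        raise ValueError("setpoint out of range")
    return value


def run_robustness_tests() -> list[str]:
    """Feed disallowed inputs; list any the validator wrongly accepted."""
    bad_inputs = [-1.0, 1200.1, float("nan"), float("inf"), "900", None, True]
    wrongly_accepted = []
    for raw in bad_inputs:
        try:
            accept_setpoint(raw)
        except ValueError:
            continue  # expected outcome: the input was rejected
        wrongly_accepted.append(repr(raw))
    return wrongly_accepted


failures = run_robustness_tests()
print("wrongly accepted:", failures)
```

The point of the design is that the test passes only when every anomalous input is rejected. Valid boundary values such as `0` and `1200.0` should still be accepted, which a companion functional test would confirm.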

In addition to testing for compliance with standards and requirements, it is also necessary to examine any segments of what may be “software of unknown pedigree,” or SOUP. For example, there is danger associated with areas of “dead” code that could be activated by a hacker or by an obscure event in the system for malicious purposes. Although it is ideal to start implementing security from the ground up, most projects include pre-existing code that may have what looks like just the needed functionality. Developers need to resist automatically pulling in such code, even code from the same organization, without subjecting it to exactly the same rigorous analysis used on their own code. Used together, static and dynamic analysis can reveal areas of dead code, which can be a source of danger or may just take up space. It is necessary to properly identify such code and deal with it, usually by eliminating it. As units are developed, tested, modified, and retested by teams of developers (possibly in entirely different locations), the test records generated from a comprehensive tool suite can be stored, shared, and used while units are integrated into the larger project.
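The way static and dynamic results combine to expose unexecuted code can be sketched in a few lines. The example below uses Python itself as the language under analysis purely for convenience: the static side lists every function defined in a unit, the dynamic side records which functions a test run actually enters, and the difference is a list of candidates for review. The `SOURCE` unit and its function names are hypothetical. A function that the tests never reach is not proven dead; it may simply be untested.

```python
import ast
import sys

# Hypothetical unit under analysis, invented for this illustration.
SOURCE = '''
def open_valve():
    return "open"

def close_valve():
    return "closed"

def factory_reset():
    return "reset"

def run_tests():
    return [open_valve(), close_valve()]
'''


def defined_functions(source: str) -> set[str]:
    """Static side: names of all functions defined in the source."""
    tree = ast.parse(source)
    return {n.name for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)}


def executed_functions(source: str, entry: str) -> set[str]:
    """Dynamic side: names of functions entered while running entry()."""
    namespace: dict = {}
    exec(compile(source, "<unit>", "exec"), namespace)
    seen: set[str] = set()

    def tracer(frame, event, arg):
        # Record only calls into the unit under analysis.
        if event == "call" and frame.f_code.co_filename == "<unit>":
            seen.add(frame.f_code.co_name)
        return None  # per-line tracing is not needed

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        namespace[entry]()
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return seen


never_run = defined_functions(SOURCE) - executed_functions(SOURCE, "run_tests")
print(sorted(never_run))
```

Here the only function left over is `factory_reset`, which the test entry point never calls. In a real project, each such candidate would be traced back to a requirement and then either tested or removed.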

For systems to be reliable and safe, they must also be secure. To that end, their code must not only comply with language rules but also adhere to well-defined strategies that assure safety and security. Applying a comprehensive suite of test and analysis tools to an organization’s development process greatly improves the thoroughness and accuracy of the security measures that protect vital systems. It also smooths what can often be a painful effort for teams to work together toward common goals and to have confidence in their final product. The resulting product will stand a much better chance of customer approval and, if needed, certification by authorities – and it is far less likely to become the star of the next blockbuster movie.