Simulation: Is it better than the real thing?

October 16, 2017


In my professional life, I have to be careful what I say: I'm a software engineer, and a large proportion of my colleagues have a hardware design background. I wouldn't say that these two disciplines are at war, but there has always been a tension between hardware and software developers. In an embedded design, if something goes wrong, each side tends to assume that the other is at fault. Worse still, if a hardware design flaw is located late in the development process, it may be too late to fix it economically, so the only option is to accommodate the problem in software. And gosh, does that rankle.

So, I tend to regard hardware as a necessary evil that lets me run my software. Therefore, it shouldn’t come as a surprise to learn that a favorite technology of mine is simulation. Let’s first define the term. If someone says that they’re seeking a simulation solution, I always quiz them to ascertain exactly what they’re looking for. Broadly, a simulator for embedded software development is some kind of environment that enables software to be run without having the final hardware available. This can be approached in a number of ways:

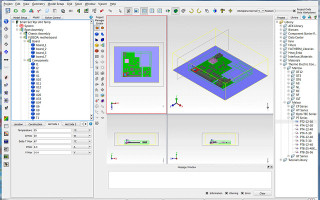

- Logic simulation: the hardware logic is simulated at the lowest (gate) level. Although this is ideal for developing the hardware, using it to model a complete embedded system and execute code would be painfully slow.

- Hardware/software co-verification: by linking an instruction-set simulator (see next bullet) to a logic simulator (see previous bullet), a compromise in performance may be achieved. This makes sense because the CPU design is typically already fully proven, so a gate-accurate model of it is overkill.

- Instruction-set simulation (ISS): an ISS reads the code, instruction by instruction, and simulates its execution on the chosen CPU. Historically, this approach has been much slower than real time, but it is very precise and can give useful execution-time estimates. However, with the amazingly powerful desktop computers we now have at our disposal, execution speeds are quite reasonable. Typically, the CPU simulator is surrounded by functional models of peripheral devices. This can be an excellent approach for debugging low-level code such as drivers.

- Host (native) code execution: running the code on the host (desktop) computer delivers the maximum performance, often exceeding that of the real target hardware. For this to be effective, the environment must offer functional models of peripherals and appropriate integration with the host computer's peripherals (such as networking, serial I/O, USB, etc.). For larger applications, this approach enables considerable progress to be made before hardware is available and offers an economical solution for larger, distributed teams.
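To make the instruction-set simulation idea more concrete, here is a minimal sketch of the fetch-decode-execute loop at the heart of an ISS, written under loudly stated assumptions: the CPU is a hypothetical 8-bit accumulator machine invented for illustration, and its opcodes, per-instruction cycle counts, and the memory-mapped UART address (`UART_TX`) are all made up. It shows the two points from the ISS bullet: a functional peripheral model sits on the simulated bus (writes to `UART_TX` are captured instead of driving a real pin), and a running cycle count gives a crude execution-time estimate. A real ISS models a real instruction set, pipeline effects, and many more peripherals.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Opcodes of a hypothetical 8-bit accumulator CPU (invented for this sketch). */
enum { OP_LDI = 0x01, OP_ADD = 0x02, OP_STA = 0x03, OP_JNZ = 0x04,
       OP_DEC = 0x05, OP_HALT = 0xFF };

#define MEM_SIZE 256
#define UART_TX  0xF0u  /* hypothetical memory-mapped UART transmit register */

typedef struct {
    uint8_t acc;            /* accumulator */
    uint8_t pc;             /* program counter (wraps within 256 bytes) */
    uint8_t mem[MEM_SIZE];  /* code and data share one address space */
    unsigned long cycles;   /* running total from the assumed cycle table */
    char uart_out[64];      /* bytes "transmitted" by the UART model */
    size_t uart_len;
} Cpu;

/* Simulated bus: a write to UART_TX goes to the functional UART model,
   any other address goes to RAM. */
static void bus_write(Cpu *c, uint8_t addr, uint8_t val) {
    if (addr == UART_TX) {
        if (c->uart_len < sizeof c->uart_out - 1) {
            c->uart_out[c->uart_len++] = (char)val;
            c->uart_out[c->uart_len] = '\0';
        }
    } else {
        c->mem[addr] = val;
    }
}

/* Fetch, decode, and execute one instruction.
   Returns 0 when the CPU halts or hits an illegal opcode, 1 otherwise. */
static int step(Cpu *c) {
    uint8_t op = c->mem[c->pc++];
    switch (op) {
    case OP_LDI: c->acc = c->mem[c->pc++];                      c->cycles += 2; return 1;
    case OP_ADD: c->acc = (uint8_t)(c->acc + c->mem[c->pc++]);  c->cycles += 2; return 1;
    case OP_STA: bus_write(c, c->mem[c->pc++], c->acc);         c->cycles += 3; return 1;
    case OP_DEC: c->acc--;                                      c->cycles += 1; return 1;
    case OP_JNZ: {
        uint8_t target = c->mem[c->pc++];
        if (c->acc != 0) c->pc = target;  /* branch taken while acc is nonzero */
        c->cycles += 2;
        return 1;
    }
    case OP_HALT: c->cycles += 1; return 0;
    default:
        fprintf(stderr, "illegal opcode 0x%02X at 0x%02X\n",
                (unsigned)op, (unsigned)(uint8_t)(c->pc - 1));
        return 0;
    }
}

/* Loads a small program that sends "Hi" to the UART, then counts the
   accumulator down from 3 to 0, and runs it until HALT. */
Cpu run_demo(void) {
    static const uint8_t program[] = {
        OP_LDI, 'H', OP_STA, UART_TX,
        OP_LDI, 'i', OP_STA, UART_TX,
        OP_LDI, 3,
        OP_DEC,       /* address 10: top of the countdown loop */
        OP_JNZ, 10,
        OP_HALT
    };
    Cpu c;
    assert(sizeof program <= MEM_SIZE);
    memset(&c, 0, sizeof c);
    memcpy(c.mem, program, sizeof program);
    while (step(&c)) { }
    return c;
}
```

Calling `run_demo()` from a `main` function and printing `uart_out` and `cycles` shows the captured UART output ("Hi") alongside a cycle total of 22 under the invented cycle table. Keeping the peripheral model behind a single `bus_write` function is a deliberate choice: further device models can be added there without touching the instruction decoder.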

These technologies do not compete with one another. Each offers a different trade-off between precision and speed, and each may be appropriate at a different point in the design cycle. This can be visualized in the plot below.

You can change the rules and deviate from this nice relationship by cheating, for example by using hardware acceleration: special electronics designed to turbo-charge logic simulation. But that is no longer simulation; it's emulation, which is another story.